Kubeflow

Starting with Kubeflow 1.7, BentoML provides a native integration with Kubeflow. This integration lets you package models trained in Kubeflow Notebooks or Pipelines as Bentos and deploy them as microservices in the Kubernetes cluster through BentoML's cloud-native components and custom resource definitions (CRDs). This guide explains how to use BentoML and Kubeflow together to streamline deploying models at scale.

Prerequisites

Install Kubeflow and the BentoML resources into the Kubernetes cluster. See the Kubeflow and BentoML manifests installation guides for details.

After the BentoML Kubernetes resources are installed successfully, the following CRDs should be registered in the cluster:

```shell
kubectl -n kubeflow get crds | grep bento
```

```
bentodeployments.serving.yatai.ai   2022-12-22T18:46:46Z
bentoes.resources.yatai.ai          2022-12-22T18:46:47Z
bentorequests.resources.yatai.ai    2022-12-22T18:46:47Z
```
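If you want to script this check (for example, in a CI job), the following Python sketch parses the output of `kubectl get crds` and reports which of the three BentoML CRDs are missing. The helper names `find_missing_crds` and `list_crds` are hypothetical, and the sketch assumes `kubectl` is on the `PATH` and configured for the target cluster. Because CRDs are cluster-scoped, it omits the `-n` flag.

```python
import subprocess

# CRD names expected after installing the BentoML Kubernetes resources.
EXPECTED_BENTO_CRDS = (
    "bentodeployments.serving.yatai.ai",
    "bentoes.resources.yatai.ai",
    "bentorequests.resources.yatai.ai",
)


def find_missing_crds(listing: str, expected=EXPECTED_BENTO_CRDS) -> list[str]:
    """Return the expected CRD names absent from `kubectl get crds` output.

    Each non-empty line starts with the CRD name, followed by whitespace
    and the creation timestamp. A header line, if present, is harmless:
    its first token ("NAME") never matches an expected CRD.
    """
    installed = {line.split()[0] for line in listing.splitlines() if line.strip()}
    return [name for name in expected if name not in installed]


def list_crds() -> str:
    """Run `kubectl get crds` and return its stdout (requires cluster access)."""
    completed = subprocess.run(
        ["kubectl", "get", "crds", "--no-headers"],
        capture_output=True,
        text=True,
        check=True,  # raise CalledProcessError if kubectl fails
    )
    return completed.stdout
```

For example, `find_missing_crds(list_crds())` returns an empty list when all three CRDs are installed.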

Kubernetes Components and CRDs

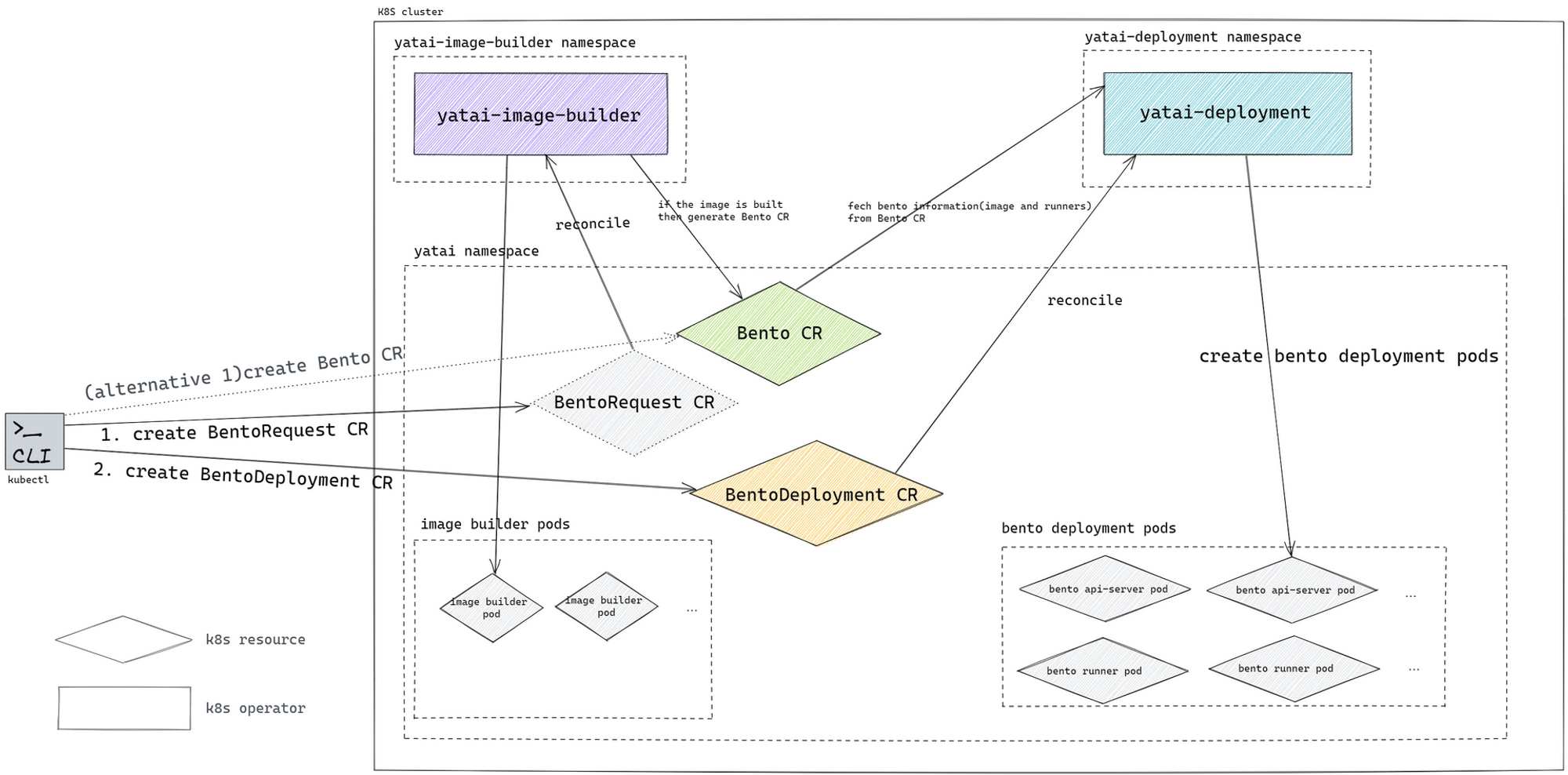

BentoML offers three custom resource definitions (CRDs) in the Kubernetes cluster through Yatai.

| CRD | Component | Description |
|---|---|---|
| BentoRequest | yatai-image-builder | Describes a Bento's OCI image build request and how to generate a Bento CR. |
| Bento | yatai-image-builder | Describes a Bento's metadata. |
| BentoDeployment | yatai-deployment | Describes a Bento's deployment configuration. |

Workflow on Notebook

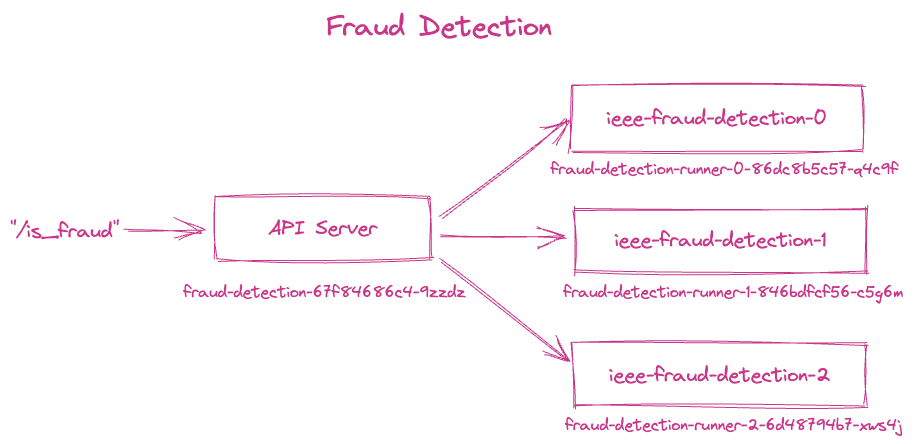

In this example, we train three fraud detection models in a Kubeflow notebook using the Kaggle IEEE-CIS Fraud Detection dataset. We then create a BentoML service that invokes all three models concurrently and returns a decision on whether a transaction is fraudulent, and we build the service into a Bento. Finally, we showcase two deployment workflows using BentoML's Kubernetes operators: deploying directly from the Bento, and deploying from an OCI image built from the Bento.
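The service in this example fans each request out to all three models at once. As a rough illustration of that pattern only, the following sketch uses plain `asyncio` rather than BentoML's API: the three models are hypothetical async stubs (`make_stub_model`), and the averaging rule and `0.5` threshold are assumptions, not the decision logic of the actual example. In a real BentoML service, each stub would be replaced by a call to one of the trained models.

```python
import asyncio
from typing import Awaitable, Callable, Sequence

# Hypothetical stand-in for a trained model: an async callable mapping
# a transaction's feature vector to a fraud probability in [0, 1].
ModelFn = Callable[[Sequence[float]], Awaitable[float]]


def make_stub_model(weight: float) -> ModelFn:
    """Build a toy async 'model' for illustration only (not a real fraud model)."""

    async def predict(features: Sequence[float]) -> float:
        await asyncio.sleep(0)  # yield to the event loop, standing in for a remote call
        score = weight * sum(features) / max(len(features), 1)
        return min(max(score, 0.0), 1.0)  # clamp to a valid probability

    return predict


async def is_fraud(
    features: Sequence[float],
    models: Sequence[ModelFn],
    threshold: float = 0.5,  # hypothetical decision threshold
) -> dict:
    # Invoke all models concurrently rather than one after another.
    probabilities = await asyncio.gather(*(model(features) for model in models))
    # Hypothetical aggregation rule: average the three probabilities.
    mean_probability = sum(probabilities) / len(probabilities)
    return {
        "probabilities": list(probabilities),
        "is_fraud": mean_probability >= threshold,
    }


models = [make_stub_model(w) for w in (0.8, 1.0, 1.2)]
result = asyncio.run(is_fraud([0.2, 0.4, 0.6], models))
print(result)
```

Because `asyncio.gather` awaits the three calls concurrently, the latency of each request is roughly that of the slowest model rather than the sum of all three.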

See the BentoML Fraud Detection Example for the complete workflow, from model training to deployment on Kubernetes.